Some of us may or may not be affected by this: after updating minio to RELEASE.2022-10-29T06-21-33Z, minio fails to start. Why? minio ripped out the old backend code, as per the release notes:

Removes remaining gateway implementations of NAS/S3 and legacy FS mode completely - refer PR

Existing users should continue to use their current releases if they wish to continue using gateway mode. Subscribed customers get downstream support until they are subscribed as per the terms of the subscription.

So after updating to the latest minio release, my deployment was pretty much borked:

```
ERROR Unable to use the drive /data:
Drive /data: found backend type fs, expected xl or xl-single: Invalid arguments specified
```

After looking around the issue thread "scheduled removal of MinIO Gateway for GCS, Azure, HDFS", it seems that if you deployed minio after June (cmiiw), you will likely not hit this issue, because new deployments already default to the new xl-single backend.

So what can we do? There are at least two options: stay on the previous release, RELEASE.2022-10-24T18-35-07Z, or migrate to the new backend. Pinning the tag is the easiest way, but again, we will not get any further bug fixes or improvements.
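To tell which situation you are in, you can look at the data directory itself. This is a minimal sketch. It assumes the old data lives in the host path mounted as /data (./data-standalone in my compose file) and that format.json is a small JSON document like the one shown later in this post. It only reads the "format" field: the error above reports this as fs, while the new backend uses xl or xl-single. The function names backend_format and check_drive are my own and are not part of minio.

```shell
# Sketch: report which backend format a minio data directory uses.
# Assumption: $1 is the host path of the /data volume (e.g. ./data-standalone).

backend_format() {
    fmt_file="$1/.minio.sys/format.json"
    if [ ! -f "$fmt_file" ]; then
        echo "no format.json under $1" >&2
        return 1
    fi
    # Print the value of the "format" key, e.g. fs, xl or xl-single.
    sed -n 's/.*"format"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$fmt_file"
}

check_drive() {
    fmt=$(backend_format "$1") || return 1
    case "$fmt" in
        fs)
            # Legacy FS mode: the error above says newer releases refuse it.
            echo "$1: legacy FS mode, refused from RELEASE.2022-10-29T06-21-33Z on"
            return 2 ;;
        xl|xl-single)
            echo "$1: $fmt backend, OK for newer releases"
            return 0 ;;
        *)
            echo "$1: unknown format '$fmt'" >&2
            return 1 ;;
    esac
}
```

Source it and run check_drive ./data-standalone. A return code of 2 means the drive is still in legacy FS mode, so you either have to pin the tag or migrate.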

My current compose file:

```yaml
version: "3.8"

services:
  s3:
    image: quay.io/minio/minio:latest
    command: server /data --console-address ":9001"
    #restart: always
    user: "1000:1000"
    environment:
      - MINIO_ROOT_USER=minioadmin
      - MINIO_ROOT_PASSWORD=minioadmin
    ports:
      - "9000:9000"
      - "9001:9001"
    volumes:
      - /etc/localtime:/etc/localtime:ro
      - ./data-standalone:/data
    networks:
      - minio

networks:
  minio:
    name: minio
```

The curse of the :latest tag broke our minio... So we change the tag to the older release and redeploy the container.
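You can edit the tag by hand. If you have several compose files, though, a small sed helper does the same job. This is a sketch under my own assumptions: the function name pin_minio_tag and the file name compose.yaml are hypothetical, and the helper expects the image line to look like the one in my compose file.

```shell
# Sketch: replace whatever tag the minio image has (e.g. :latest) with a fixed release.
# Assumption: the compose file contains a line like "image: quay.io/minio/minio:<tag>".
pin_minio_tag() {
    file="$1"
    tag="$2"
    # -i.bak edits in place with both GNU and BSD sed and keeps the original as $file.bak.
    sed -i.bak "s#\(image:[[:space:]]*quay\.io/minio/minio:\).*#\1${tag}#" "$file"
}
```

For example, run pin_minio_tag compose.yaml RELEASE.2022-10-24T18-35-07Z and then docker compose up -d, which recreates the container with the pinned image.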

```yaml
version: "3.8"

services:
  s3:
    image: quay.io/minio/minio:RELEASE.2022-10-24T18-35-07Z
    command: server /data --console-address ":9001"
    #restart: always
    user: "1000:1000"
    environment:
      - MINIO_ROOT_USER=minioadmin
      - MINIO_ROOT_PASSWORD=minioadmin
    ports:
      - "9000:9000"
      - "9001:9001"
    volumes:
      - /etc/localtime:/etc/localtime:ro
      - ./data-standalone:/data
    networks:
      - minio

networks:
  minio:
    name: minio
```

Another way is to migrate. In simple terms:

- Stop the current minio
- Create a new minio with an empty data directory
- Sync each bucket's data from the old minio to the new one

This method has some caveats. We need at least twice the disk space (old minio data + new minio data). I also couldn't find any way to import/export users and their permissions. Thankfully my installation was simple/minimal, so I could just recreate the users manually in the minio console.
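Before starting, it is worth checking the twice-the-space caveat. This is a rough sketch. It assumes the old data is in ./data-standalone and the new data will go to ./data-new, as in my compose files. The function name enough_space_for_copy is hypothetical. It only compares the size of the old data with the free space on the target filesystem and ignores any extra metadata overhead the new backend might add.

```shell
# Sketch: is there room on the target filesystem for a second copy of the old data?
enough_space_for_copy() {
    old_dir="$1"
    target="$2"
    # If the new data directory does not exist yet, measure its parent instead.
    [ -d "$target" ] || target=$(dirname "$target")
    # Size of the old data in KiB.
    need_kb=$(du -sk "$old_dir" | awk '{print $1}')
    # Available KiB on the filesystem holding the target (-P keeps one line per filesystem).
    free_kb=$(df -Pk "$target" | awk 'NR == 2 {print $4}')
    echo "need ${need_kb} KiB, free ${free_kb} KiB"
    [ "$free_kb" -ge "$need_kb" ]
}
```

Running enough_space_for_copy ./data-standalone ./data-new prints both numbers and returns non-zero when there is not enough room.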

First of all, stop the minio deployment. Then, starting from the previous (pinned) compose file, we point the /data volume at a new, empty directory:

```yaml
version: "3.8"

services:
  s3:
    image: quay.io/minio/minio:RELEASE.2022-10-24T18-35-07Z
    command: server /data --console-address ":9001"
    #restart: always
    user: "1000:1000"
    environment:
      - MINIO_ROOT_USER=minioadmin
      - MINIO_ROOT_PASSWORD=minioadmin
    ports:
      - "9000:9000"
      - "9001:9001"
    volumes:
      - /etc/localtime:/etc/localtime:ro
      #- ./data-standalone:/data
      - ./data-new:/data
    networks:
      - minio

networks:
  minio:
    name: minio
```

This initializes the new, empty data directory with the new xl-single backend. We can check it in /data/.minio.sys/format.json:

```json
{"version":"1","format":"xl-single","id":"68511917-b9aa-4e08-b286-d474f328d92b","xl":{"version":"3","this":"48a29430-4b1d-4f18-8f12-9714ae8264e0","sets":[["48a29430-4b1d-4f18-8f12-9714ae8264e0"]],"distributionAlgo":"SIPMOD+PARITY"}}
```

Now we can start the migration. For this we need mc, the MinIO Client, which is basically the minio version of aws-cli.

Downlad the mc

$ curl https://dl.min.io/client/mc/release/linux-amd64/mc -o ./mc

$ chmod +x ./mc

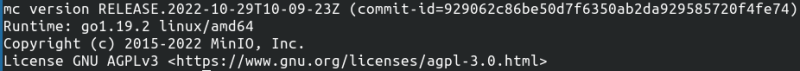

$ ./mc --versionThen we create alias for our new minio deployment,

$ ./mc alias set minionew http://minio-ip:9000 minioadmin minioadmin

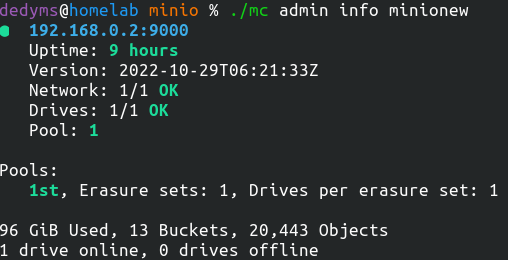

$ ./mc admin info minionew

ALIAS is a name to our minio installation. Adjust ip port with your installaton, on default using port 9000

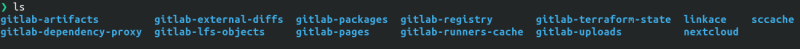

And we are pretty much ready to migrate. Now go to the old minio data directory. There will be one folder per bucket, next to .minio.sys.

My bucket list:

Then we need to create every bucket on the new deployment. Let's take gitlab-artifacts as an example:

```shell
$ ./mc mb minionew/gitlab-artifacts
```

You can create all the buckets first and then migrate them.
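With many buckets, creating them one by one gets tedious. This is a sketch. It assumes the old data directory is ./data-standalone, the alias is minionew as set above, and the MC variable points to the mc binary (defaulting to ./mc). The names list_old_buckets and create_buckets are hypothetical helpers. The sketch relies on the legacy FS layout described above, where each top-level folder other than .minio.sys is a bucket. The * glob skips dot-directories, so .minio.sys is left out.

```shell
# Sketch: create on the new deployment every bucket found in the old FS-mode data directory.
MC="${MC:-./mc}"

list_old_buckets() {
    # Each visible top-level directory is a bucket; * does not match .minio.sys.
    for d in "$1"/*/; do
        [ -d "$d" ] || continue
        basename "$d"
    done
}

create_buckets() {
    old_dir="$1"
    alias_name="${2:-minionew}"
    list_old_buckets "$old_dir" | while IFS= read -r bucket; do
        # --ignore-existing: do not fail if the bucket was already created.
        # </dev/null keeps mc from reading the bucket list on stdin.
        "$MC" mb --ignore-existing "$alias_name/$bucket" </dev/null || exit 1
    done
}
```

Usage: create_buckets ./data-standalone minionew. Thanks to --ignore-existing, it is safe to run again.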

Let's sync it up:

```shell
$ ./mc mirror ./gitlab-artifacts minionew/gitlab-artifacts
```

This is going to take a while, depending on the data size. If we created all the buckets beforehand, we can queue the syncs with &&:

```shell
$ ./mc mirror ./gitlab-artifacts minionew/gitlab-artifacts && ./mc mirror ./gitlab-dependency-proxy minionew/gitlab-dependency-proxy && ./mc ... ...
```

Take your time and let it finish.
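Instead of typing a long && chain, a loop can mirror every bucket folder in turn and stop at the first failure, which is what && gives you. This is a sketch. It assumes the old data directory is passed as the first argument (./data-standalone in my setup), the alias is minionew, and the MC variable points to the mc binary (defaulting to ./mc). The name mirror_all is hypothetical.

```shell
# Sketch: mirror each bucket folder of the old data directory, stopping at the first error.
MC="${MC:-./mc}"

mirror_all() {
    old_dir="$1"
    alias_name="${2:-minionew}"
    # Visible top-level directories are buckets; * skips .minio.sys.
    for d in "$old_dir"/*/; do
        [ -d "$d" ] || continue
        bucket=$(basename "$d")
        echo "mirroring $bucket ..."
        # Same effect as cmd1 && cmd2 && ...: give up as soon as one mirror fails.
        "$MC" mirror "$old_dir/$bucket" "$alias_name/$bucket" || return 1
    done
}
```

Usage: mirror_all ./data-standalone minionew.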

Please take down any services/containers that are trying to use the minio deployment.

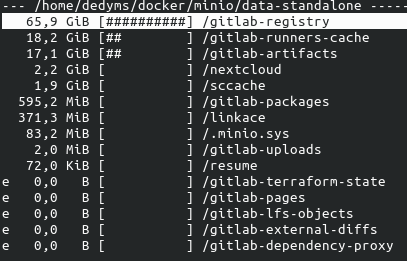

Trivia, my migration size:

sources: